Oracle EPM, Analytics, Hyperion and other stuff.

Guillaume Slee's EPM/BI blog. Does exactly what it says on the tin.

Friday, 1 April 2022

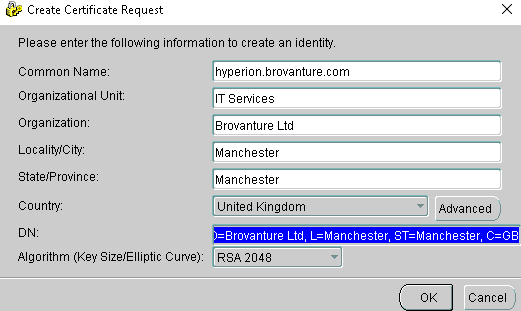

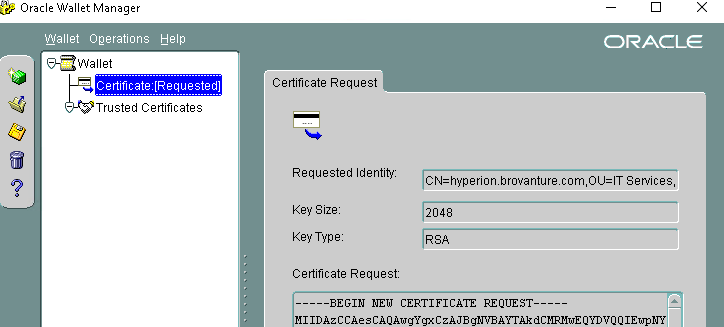

How to Create an Oracle Wallet SSL Certificate Request Containing Subject Alternative Name?

Tuesday, 15 March 2022

EPM 11.2.8 FDMEE ODI Upgrade Issue

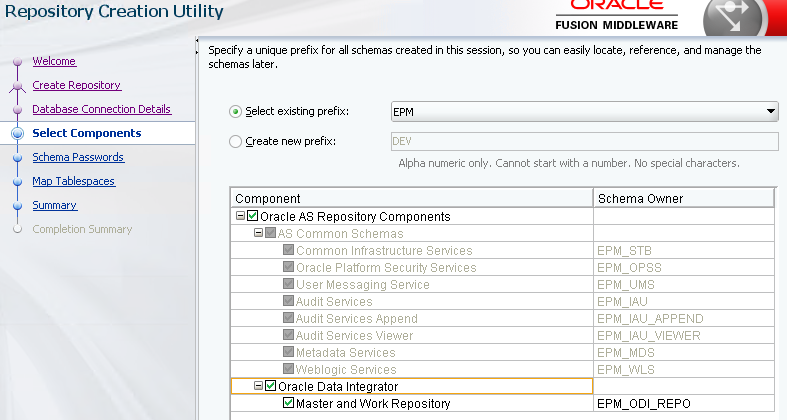

I encountered an issue this week when upgrading an 11.2.2 EPM environment to version 11.2.8.

Note that this is only an issue when using an Oracle database and when upgrading from a version older than 11.2.5.

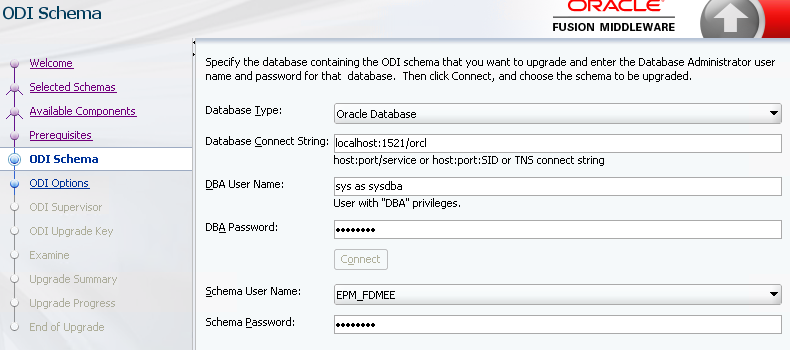

The problem occurred at the "Manual Configuration Tasks for FDMEE" task where you run the RCU Upgrade Assistant (UA.bat) to manually upgrade the version of ODI used by your FDMEE instance. I got the following errors:

- The specified database does not contain any schemas for Oracle Data Integrator or the database user lacks privilege to view the schemas.

  - Cause: The database you have specified does not contain any schemas registered as belonging to the component you are upgrading, or else the current database user lacks privilege to query the contents of the schema version registry.

  - Action: Verify that the database contains schema entries in schema version registry. If it does not, specify a different database. Verify that the user has DBA privilege. Connect to the database as DBA.

- ODI-10179 / ODI-26168: Client requires a repository with version 05.02.02.09 but the repository version found is 05.02.02.07

The repository version "05.02.02.07" corresponds to ODI version 12.2.1.3.0, while "05.02.02.09" is used by versions 12.2.1.3.190708, 12.2.1.4.0 and 12.2.1.4.200123.
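The ODI-10179 message tells you exactly which repository versions are in play. If you are checking several environments, a small helper can pull them out of a log line and translate them. This is a hypothetical sketch: the function name is made up, and the lookup table only contains the version mappings listed above, so anything else comes back as an empty list.

```python
import re

# Repository versions mapped to ODI releases, using only the mappings listed
# in this post; this is not an exhaustive table.
REPO_VERSION_TO_ODI = {
    "05.02.02.07": ["12.2.1.3.0"],
    "05.02.02.09": ["12.2.1.3.190708", "12.2.1.4.0", "12.2.1.4.200123"],
}

# Matches the ODI-10179 / ODI-26168 wording quoted above. The version pattern
# requires dotted digits, so a trailing full stop is never captured.
_MISMATCH = re.compile(
    r"requires a repository with version (?P<required>\d+(?:\.\d+)+)"
    r" but the repository version found is (?P<found>\d+(?:\.\d+)+)"
)


def explain_repo_mismatch(message):
    """Decode a repository-version mismatch message, or return None."""
    match = _MISMATCH.search(message)
    if match is None:
        return None
    required, found = match.group("required"), match.group("found")
    return {
        "required": required,
        "found": found,
        # An empty list means the version is not in the table above.
        "required_odi": REPO_VERSION_TO_ODI.get(required, []),
        "found_odi": REPO_VERSION_TO_ODI.get(found, []),
    }
```

Passing it the ODI-10179 line above shows the client expects a 12.2.1.3.190708/12.2.1.4.x repository while the FDMEE repository is still at 12.2.1.3.0, which is the gap the Upgrade Assistant is meant to close.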

Wednesday, 1 December 2021

OAC - Using CLI to Automate Starting-Stopping of Instances

A couple of years ago I blogged about how to start and stop Oracle Analytics instances using the REST API. This let you schedule scripts to manage the uptime of your instances and save those valuable Oracle credits. This worked well for the older Autonomous instances, but those scripts no longer work with the newer Gen2 OAC architecture. In this blog I’ll show how to use the Oracle CLI (Command Line Interface) to control your instances.

The Oracle CLI is very simple to use, requires no coding skills and has the added bonus of being able to manage most of your Oracle Cloud Infrastructure tasks (e.g. managing DBaaS instances or virtual machines).

Pre-Requisites

Installing the Oracle CLI on Windows

- Download the PowerShell install script.

- Run the PowerShell script.

  - This will download and install Python.

  - It will also download and install the CLI.
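Once the script has finished, open a new command prompt so the updated PATH is picked up. A quick sanity check is to look for the `oci` executable and ask it for its version. The sketch below assumes you let the installer add `oci` to your PATH; the function name is hypothetical.

```python
import shutil
import subprocess


def oci_cli_version(executable="oci"):
    """Return the installed OCI CLI version, or None if it is not on PATH."""
    path = shutil.which(executable)  # honours PATHEXT on Windows
    if path is None:
        return None
    # `oci --version` prints the CLI version and exits.
    result = subprocess.run([path, "--version"],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

Calling `print(oci_cli_version() or "OCI CLI not found on PATH")` either prints the version or tells you the install (or PATH update) didn't take.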

OCI CLI Setup

Add the Keys to your IAM User Account
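`oci setup config` walks you through creating the CLI config file and an API signing key pair. The public key is what you upload to your IAM user, and the fingerprint written to the config has to match it. Most setup problems come down to a missing entry or a `key_file` path that doesn't exist, so here is a hedged sketch that checks a profile. It assumes the default location (`~/.oci/config`, i.e. `%USERPROFILE%\.oci\config` on Windows) and the standard `user`, `fingerprint`, `tenancy`, `region` and `key_file` entries; the function name is hypothetical.

```python
import configparser
from pathlib import Path

# Entries the OCI CLI reads from each profile in its config file.
REQUIRED_KEYS = ("user", "fingerprint", "tenancy", "region", "key_file")


def missing_config_entries(config_path=None, profile="DEFAULT"):
    """List required entries missing from one profile ([] means it looks OK)."""
    if config_path is None:
        config_path = Path.home() / ".oci" / "config"  # default CLI location
    parser = configparser.ConfigParser(interpolation=None)
    if not parser.read(config_path):
        raise FileNotFoundError(f"OCI config not found: {config_path}")
    if profile != "DEFAULT" and not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    section = parser[profile]  # named profiles also inherit [DEFAULT] values
    missing = [key for key in REQUIRED_KEYS if not section.get(key)]
    key_file = section.get("key_file")
    if key_file and not Path(key_file).expanduser().is_file():
        missing.append("key_file (file not found)")
    return missing
```

An empty list doesn't prove the key is registered in IAM, but it rules out the local half of the setup.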

Stopping and Starting OAC using the CLI Command Line
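With the CLI configured, starting or stopping an instance is a single command: `oci analytics analytics-instance start` or `stop` with `--analytics-instance-id <OCID>`. To run it on a schedule (for example from Windows Task Scheduler), a thin wrapper is one option. This is a sketch, not the only way to do it: the function names are hypothetical, it assumes those subcommands are available in your CLI version (`oci analytics analytics-instance --help` will confirm), and you supply the OCID of your own OAC instance.

```python
import shutil
import subprocess

VALID_ACTIONS = ("start", "stop")


def oac_command(action, instance_ocid):
    """Build the OCI CLI arguments to start or stop one OAC instance."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {VALID_ACTIONS}, got {action!r}")
    return ["oci", "analytics", "analytics-instance", action,
            "--analytics-instance-id", instance_ocid]


def run_oac_action(action, instance_ocid):
    """Run the command; raises CalledProcessError if the CLI exits non-zero."""
    args = oac_command(action, instance_ocid)
    # Resolve the actual launcher on PATH (PATHEXT-aware on Windows).
    args[0] = shutil.which("oci") or "oci"
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `run_oac_action("stop", "ocid1.analyticsinstance.oc1..<your-instance-ocid>")` in an evening job and `"start"` in a morning job. Keeping the argument builder separate makes it easy to log exactly what was run.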

Other Oracle CLI Commands
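Most CLI commands follow the same `oci <service> <resource> <action>` pattern. For instance, `oci analytics analytics-instance list --compartment-id <OCID>` lists the instances in a compartment, which is handy for checking what is still running. The sketch below wraps it and pulls out the name, lifecycle state and OCID. It assumes the CLI's default JSON output, where results sit in a `data` list with hyphenated keys such as `lifecycle-state`, so compare it with your own output first; the function names are hypothetical.

```python
import json
import shutil
import subprocess


def summarize_instances(cli_json):
    """Extract (name, lifecycle state, OCID) tuples from the CLI's JSON output."""
    if not cli_json.strip():
        return []  # assumption: the CLI prints nothing when there are no results
    payload = json.loads(cli_json)
    return [(item.get("name"), item.get("lifecycle-state"), item.get("id"))
            for item in payload.get("data", [])]


def list_oac_instances(compartment_ocid):
    """Run the list command for one compartment and summarise the result."""
    oci = shutil.which("oci") or "oci"
    result = subprocess.run(
        [oci, "analytics", "analytics-instance", "list",
         "--compartment-id", compartment_ocid],
        capture_output=True, text=True, check=True)
    return summarize_instances(result.stdout)
```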

Wednesday, 27 October 2021

EPM Cloud - Import Hybrid Snapshot into Non-Hybrid Pod

If you’ve ever tried to import an EPM Planning hybrid-enabled application snapshot into a non-hybrid EPM pod, you will have seen this error:

EPMLCM-14000: Error reported from Core Platform.

Unsupported migration of artifacts and snapshots for Hybrid PBCS application from (Service Type ENTERPRISE with Hybrid support) to (Service Type LEGACY without Hybrid support).

Download the Snapshot

Edit the Application Definition.xpad File

Edit the Application Settings.xml File
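Whatever changes you make to these two files, the snapshot has to be unpacked first and re-zipped afterwards, with the original folder layout intact, before you upload it to the target pod. Here is a hypothetical sketch of that unpack/repack step only. It assumes the snapshot is a standard .zip and that the files match `*.xpad` and `Application Settings.xml`; adjust the patterns to what you actually find in your snapshot. It does not make the edits for you.

```python
import fnmatch
import zipfile
from pathlib import Path

# Hypothetical patterns based on the headings above; adjust to your snapshot.
PATTERNS = ("*.xpad", "Application Settings.xml")


def unpack_snapshot(snapshot_zip, work_dir, patterns=PATTERNS):
    """Extract the snapshot and return the files matching `patterns`."""
    work_dir = Path(work_dir)
    with zipfile.ZipFile(snapshot_zip) as zf:
        zf.extractall(work_dir)
    return sorted(p for p in work_dir.rglob("*")
                  if p.is_file() and any(fnmatch.fnmatch(p.name, pat)
                                         for pat in patterns))


def repack_snapshot(work_dir, output_zip):
    """Zip the edited tree back up, keeping the original relative paths."""
    work_dir = Path(work_dir)
    with zipfile.ZipFile(output_zip, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(work_dir.rglob("*")):
            if path.is_file():
                # Store forward-slash paths relative to the snapshot root.
                zf.write(path, path.relative_to(work_dir).as_posix())
```

Write the output zip outside the working folder so it doesn't end up inside itself.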

Monday, 23 August 2021

EPM Cloud - Make the User Experience Simple & Intuitive with Direct Links

One great new feature that came out recently in Oracle EPM Cloud is the ability to download direct links to any EPM Cloud navigation flow card or cluster. This includes links to both the default cards and any custom cards and clusters you have created. Task lists can now be super simple for the end user: instead of a War and Peace list of instructions on how to navigate to User Preferences or how to open Data Integration, just give them a direct link!

How do you access the EPM Cloud direct links?

Log in to your EPM Cloud instance. In the top-right corner, click the down arrow next to your username and then click ‘Export URLs’:

This will download a pipe-delimited .CSV file:
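If you'd rather not scroll through the export, a few lines of Python can pull out the URL for a given card. The export's column layout isn't assumed here: this sketch treats any row mentioning the search text as a match and returns whichever fields look like URLs. The function name is hypothetical.

```python
import csv
import io


def find_direct_links(csv_text, search):
    """Return URL fields from every row mentioning `search` (case-insensitive)."""
    needle = search.lower()
    matches = []
    for row in csv.reader(io.StringIO(csv_text), delimiter="|"):
        if any(needle in field.lower() for field in row):
            matches.extend(field.strip() for field in row
                           if field.strip().lower().startswith(("http://", "https://")))
    return matches
```

Usage, assuming the export was saved as `urls.csv` (a placeholder name) in UTF-8: `with open("urls.csv", encoding="utf-8-sig", newline="") as f: print(find_direct_links(f.read(), "User Preferences"))`.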

Adding the URL to a Task List

You can add any number of URL links to a task list to help end users. For a brand-new roll-out, User Preferences and Variables are particularly useful ones.

Copy the URL and paste it into a URL-type task. Here I’ll use the User Preferences card:

The task will look like this in their Task List:

Clicking on the task opens the cluster/card/tab in a new browser tab:

Here’s another example with Data Integration. Copy the Data Integration URL from the downloaded file:

Create your task list item:

Click on the task and the Data Integration card opens directly in a new browser tab. If you added instructions to the task, the user can also switch back and forth between the browser tabs to read the instructions associated with the task.

Here is a link from the EPM Cloud YouTube channel which demos how this functionality can also be used to streamline the user experience across the Oracle suite by embedding EPM content into Oracle ERP and Oracle NetSuite: https://www.youtube.com/watch?v=qJirlSZpTq4

In the next post we'll make things even slicker with a little touch of Groovy and the REST API.

Thursday, 4 February 2021

EPM Integration Agent - MS SQL Server Windows Authentication

If you have tried to use Windows authentication in the database connection details for your Oracle EPM Integration Agent on-premises data source, you may have come across these errors:

Error: com.microsoft.sqlserver.jdbc.SQLServerException: This driver is not configured for integrated authentication

com.microsoft.sqlserver.jdbc.SQLServerException: Login failed for user

So, how DO you use a Windows username and password with an EPM Integration Agent target?

mssql-jdbc_auth-9.2.0.x64.dll

You need to add mssql-jdbc_auth-9.2.0.x64.dll to your JRE\bin and JRE\lib folders. This is the library the MS SQL JDBC driver uses for Windows authentication.

You should be able to find the library in the .zip file you downloaded containing the MS SQL JDBC driver (sqljdbc_9.2.0.0_enu.zip\sqljdbc_9.2\enu\auth\x64).
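If you look after several agent hosts, the copy can be scripted. A hypothetical sketch, assuming you have extracted the driver zip so that `auth\x64` sits under the folder you pass in (e.g. `sqljdbc_9.2\enu`) and that `jre_home` is the JRE your agent actually runs with:

```python
import shutil
from pathlib import Path

DLL_NAME = "mssql-jdbc_auth-9.2.0.x64.dll"


def install_auth_dll(driver_dir, jre_home):
    """Copy the auth DLL into the JRE's bin and lib folders; return the copies."""
    source = Path(driver_dir) / "auth" / "x64" / DLL_NAME
    if not source.is_file():
        raise FileNotFoundError(source)
    copies = []
    for sub in ("bin", "lib"):
        target = Path(jre_home) / sub
        if not target.is_dir():
            # Fail loudly rather than create folders under a wrong JRE path.
            raise NotADirectoryError(target)
        copies.append(Path(shutil.copy2(source, target)))
    return copies
```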

Restart the agent and it should now be ready to use Windows authentication. But WAIT, there's one additional step...

integratedSecurity=true

You'll need to add an additional parameter to your JDBC connection string to let the driver know that you are using Windows authentication rather than native MS SQL authentication:

jdbc:sqlserver://server:port;databaseName=dbname;integratedSecurity=true
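If you generate connection details for several databases, a tiny helper keeps the format consistent. This is only a sketch of the URL shown above; the function name is hypothetical and `server`, `port` and `database` stand for your own values.

```python
def sqlserver_jdbc_url(server, port, database, integrated_security=True):
    """Build a Microsoft SQL Server JDBC URL in the form shown above."""
    url = f"jdbc:sqlserver://{server}:{port};databaseName={database}"
    if integrated_security:
        # Tells the driver to use Windows authentication (needs the auth DLL).
        url += ";integratedSecurity=true"
    return url
```

For example, `sqlserver_jdbc_url("sqlhost", 1433, "dbname")` gives `jdbc:sqlserver://sqlhost:1433;databaseName=dbname;integratedSecurity=true`.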

Et voilà! The EPM Agent will now log in to your MS SQL Server database using a Windows user account for authentication.

Happy integrating :)

Wednesday, 16 December 2020

EPM 11.2 - How to Start OHS Remotely?

In the on-prem Hyperion EPM world it’s common to have one script sitting on your primary Foundation server which can start and stop all the services across all of the host machines in your environment. In 11.1.2.x this was simple because every process had a Windows service associated with it, and we could use the Windows SC command to start a Windows service remotely on another machine.

In 11.2.x there is no Windows service for the OHS component; it has to be started from the command line. ThatEPMBloke wrote a service wrapper to create one, but some customers don’t allow custom code in their environments. So, in a clustered EPM 11.2.x environment, how can we start our second instance of OHS from our primary Foundation server?

OHS Node Manager to the Rescue!

TL;DR

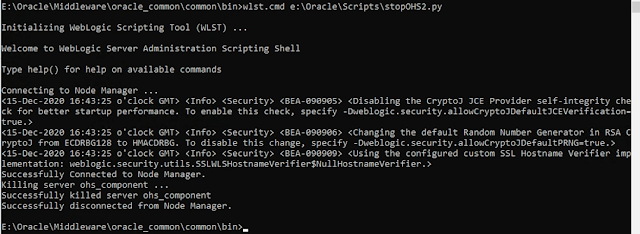

To put it simply, we use the WebLogic Scripting Tool (wlst.cmd) on the primary OHS server to connect to the Node Manager on the second OHS server and start OHS remotely.

Detail:

When you install OHS with the EPM installer, it configures OHS as a standalone node. OHS needs the local OHS Node Manager service running in order to start. By default, each Node Manager instance only listens on localhost (you can’t connect to it remotely), but we can tweak the Node Manager Windows service to listen externally. This lets us connect from the primary node to the secondary Node Manager and start OHS on the secondary node.

Alter the Node Manager Listen Address

On the second OHS server:

- Stop OHS from the command line with stopComponent.cmd ohs_component

- Stop the OHS Node Manager Windows service

- Open up the Windows registry at:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Oracle Weblogic ohs NodeManager (E_Oracle_Middleware_ohs_wlserver)\Parameters

- Edit the CmdLine parameter and find -DListenAddress='localhost' in the string

- Change the ListenAddress so that it listens on the hostname rather than localhost, e.g. -DListenAddress='Hostname2'. This enables us to connect to the Node Manager remotely.

- Start the Node Manager Windows service on the second OHS server
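If you'd rather script the registry change than edit it by hand, here is a hedged sketch using Python's standard `winreg` module. It assumes the service key name shown above (yours will differ if OHS is installed under a different path), must be run as an administrator while the Node Manager service is stopped, and only rewrites the `-DListenAddress` switch. Export the key from regedit first so you can roll back. The function names are hypothetical.

```python
import re

# Key from the steps above; the service name depends on your OHS install path.
KEY_PATH = (r"SYSTEM\CurrentControlSet\Services"
            r"\Oracle Weblogic ohs NodeManager (E_Oracle_Middleware_ohs_wlserver)"
            r"\Parameters")

_LISTEN = re.compile(r"-DListenAddress='[^']*'")


def set_listen_address(cmdline, hostname):
    """Return CmdLine with exactly one -DListenAddress='...' set to hostname."""
    # A function replacement avoids re treating backslashes in hostname specially.
    new, count = _LISTEN.subn(lambda m: f"-DListenAddress='{hostname}'", cmdline)
    if count != 1:
        raise ValueError(f"expected one -DListenAddress switch, found {count}")
    return new


def update_registry(hostname, key_path=KEY_PATH):
    """Rewrite the CmdLine value in place (Windows only, run as administrator)."""
    import winreg  # only available on Windows

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path, 0,
                        winreg.KEY_READ | winreg.KEY_SET_VALUE) as key:
        cmdline, value_type = winreg.QueryValueEx(key, "CmdLine")
        winreg.SetValueEx(key, "CmdLine", 0, value_type,
                          set_listen_address(cmdline, hostname))
```

Running `update_registry("Hostname2")` on the second OHS server replaces the localhost switch; then start the Node Manager service as described.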

Create a Super Simple Python Script to Start OHS on Server2

We can now write a simple Python (WLST) script on the primary OHS server to start OHS on the secondary OHS server.

startOHS2.py:

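# Placeholders: WebLogic Admin user/password, second OHS host and its Node Manager port.
# Assumption: add domainName='<your OHS domain>' if your Node Manager requires it.
nmConnect(username='weblogic', password='password', host='hostname2', port='5557')
nmStart(serverName='ohs_component', serverType='OHS')
nmDisconnect()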

- nmConnect() - this command connects to the OHS node manager on hostname2

- The user name and password are the WebLogic Admin credentials

- The hostname is the servername of the second OHS server

- 5557 is the default port that OHS NodeManager runs on in EPM installations

- nmStart() - this command starts the OHS 'ohs_component' server on hostname2

- nmDisconnect() - disconnects from NodeManager

Points of Interest

This works nicely; however, it stores the password in plain text, which isn't great. You can alter the nmConnect() command to use the userConfigFile and userKeyFile parameters so the user credentials are stored encrypted instead.
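The encrypted files are typically created once from an interactive wlst.cmd session: connect with nmConnect() using the WebLogic Admin credentials, then run storeUserConfig('<config file>', '<key file>', 'true'), where the third argument tells WLST to store Node Manager credentials. Check the WLST command reference for your version. The start script then only needs the two file paths. Because you need the same script on both OHS servers, a small generator keeps them consistent. This is a hypothetical sketch: it runs under normal Python (not WLST) and only writes out WLST source, mirroring the host, port and server name used in startOHS2.py.

```python
def render_start_script(host, config_file, key_file,
                        port="5557", server="ohs_component"):
    """Return WLST source for a startOHS script that reads encrypted credentials."""
    # repr() produces quoted, escaped literals that WLST's Jython can parse.
    lines = [
        "# Generated: connect using the files written by storeUserConfig().",
        f"nmConnect(userConfigFile={config_file!r}, userKeyFile={key_file!r}, "
        f"host={host!r}, port={port!r})",
        f"nmStart(serverName={server!r}, serverType='OHS')",
        "nmDisconnect()",
    ]
    return "\n".join(lines) + "\n"
```

For example, `Path("startOHS2.py").write_text(render_start_script("hostname2", "E:/secure/nm.config", "E:/secure/nm.key"))` (after `from pathlib import Path`; the paths are placeholders), then run it with `wlst.cmd startOHS2.py`. If your original nmConnect() needed a domainName, add it to the generated line as well.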

Another point to note is that your usual "startComponent.cmd ohs_component" script won't work any more on the second OHS server. This is because the script tries to connect to Node Manager on localhost, whereas Node Manager now listens on the hostname. The simple solution is to use the same startOHS2.py script on your second OHS server as well.

Hope you all have a safe and merry Christmas!