The objective of this blog is to describe what the OAC Remote Data Gateway is, how it is configured and how to us it to connect an OAC instance to an on-premises MS SQL Server database (although the process will be the same connecting to any other on-premises data source).

The Oracle Analytics team have released a utility called the ‘Oracle Analytics Cloud Data Gateway’ which enables OAC to easily connect to your on-premises datasources with minimal fuss and WITHOUT HAVING TO OPEN UP FIREWALL PORTS!

No more awkward conversations with your network admin. No more grovelling and bribing them to open up ports in their firewall.

Current supported data sources are:

- Oracle database

- SQL Server

- DB2

- Teradata

The Oracle A-Team have written a blog

here on what it does and how to configure and there is also a useful youtube video

here.

What is the Oracle Analytics Remote Data Gateway?

The Data Gateway is a replacement for the Remote Data Connector which is the OAC utility to connect to your on-premises data sources. The RDC required you to deploy a webapp to your own install of Weblogic or Tomcat which can be a challenge to those not familiar with web app deployments. The RDC is still supported but it is depreciated and will no longer receive any updates. Installing Data Gateway is super-simple. It uses Jetty which is a self-contained Java HTTP web and servlet container. Your Data Gateway app is already deployed for you. All Data Gateway requires you to do is extract the zip file if on Windows or install if on Linux. I went for the Windows option.

Why don’t I need to open up my firewall? What is this Voodoo?

The honest answer is I don’t know! It uses only one-way traffic (client to server) and I’m guessing this is how it works: The Data Gateway client will repeatedly poll your OAC instance over HTTPS on a random internal port which means the connection is initiated from the Data Gateway client to the OAC server (no inbound request from OAC so opening of firewall ports not required). If the OAC server requests data then the polling client will pick up the request and send the data up to OAC. I did a network trace and there are little TLS [Client Hello], [Server Hello] handshakes occurring every second so this ensures that the connection is kept alive.

Installing Oracle Analytics Cloud Data Gateway on Windows

The install is nice and simple. Download the OAC Data Gateway 105.3 binaries for Windows from

here and just extract the zip file to any location. There isn’t actually any install required. Here is what the folder structure looks like:

Oracle Analytics Cloud Data Gateway Configuration and Techy/Geeky Stuff

I always like to know what is going on in the background so I thought I'd have a sniff around the installation and configuration.

The configuration is simple, I won’t repeat what our friends in the A-Team have published so have a look

here for details. Here are the steps:

- Extract the Datagateway folder from the zip file you downloaded from Oracle tech network

- Launch datagateway.exe

- Enter your OAC URL

- Generate the security key

- Register a new Data Gateway agent in your cloud OAC instance. Navigate to Console -> Remote Data Connectivity screen

Here is the config screen of my Data Gateway configuration screen:

URL: Here you enter the URL to your OAC instance.

Allowed Hosts: Here you can add a whitelist of server names/IPs you will allow the Data Gateway to connect to. If you leave it empty Data Gateway can connect to any address in your data centre.

Allowed SQL Statements: Enter the types of statement allowed to be executed on the target DB. Currently only select is supported.

This is what my Data Gateway agent looks like when it's registered in the OAC 'Remote Data Connectivity' screen:

Ok, now it's time to hack around the install.

When you click on save in the Data Gateway client window the configuration gets written to a properties file: \datagateway-Windows-105.3\obiee_rdc_agent.properties

Here is whats in the file:

#Wed Jul 17 12:17:47 GMT 2019

rdcURL=http\://localhost\:8641/datagateway/javads

hostWhitelist=

enableLongPoll=1

poolThreadInterval=500

oacURL=https\://dmcbi-dmcgrp.analytics.ocp.oraclecloud.com

proxyURL=proxy-url

numberOfJobPollers=2

agentID=4b81ce5f-2e09-46f1-8451-d250ee999g1c

proxyUserName=

numberOfJobsToFetch=100

proxyHost=

proxyPassword=

statementWhitelist=

numberOfJobExecutors=100

proxyPort=

Pick a port, any port...

Notice the random port number chosen by the configuration is 8641. If you change the config then the port number will change every time you click save. If you wanted you could fix the port number if you wanted by editing the .properties file. Also notice that you can add proxy server information in this file.

Out of curiosity I tried connecting to the URL using a browser and saw this information:

Clicking on the config.jsp link opens up the config screen but over the web (just like you do on the Linux version):

There is also an interesting rdcagent page which I’m assuming will be used once Oracle get the RPD java datasource configuration working (more on this at the end of this blog)

http://localhost:8641/datagateway/static/rdcagent.js

Unfortunately there isn’t a Windows service which means that Data Gateway will not start up again automatically after a reboot of the OS. To launch the Remote Data Gateway you need to execute the dataGateway.exe manually, this then starts up the Java process for the webapp. I’m hoping Oracle will provide instructions on how to enable Data Gateway as a Windows service. When you launch datagateway.exe you will see several instances of that process in Windows Task Mgr, you will also see the Jetty java.exe process running in the background.

I've no idea why so many run in parallel.

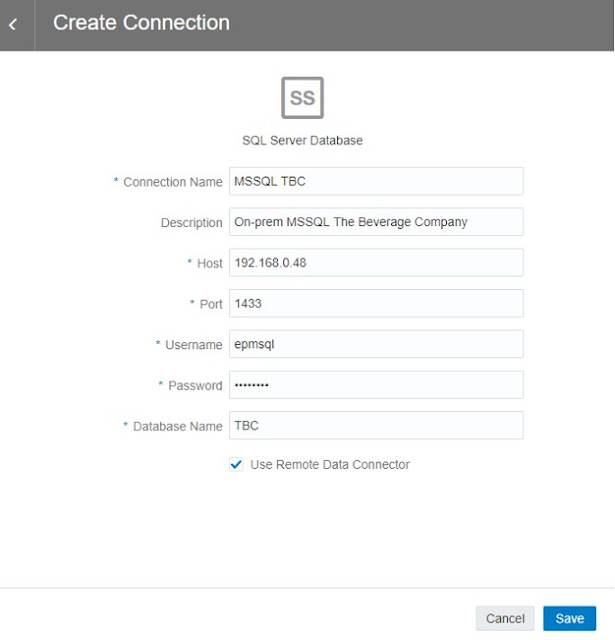

Creating a MS SQL Server Connection

The objective of this blog is to connect to an on-premises Microsoft SQL Server database.

Once you have configured the Data Gateway agent you can then create the connection to your on-premises MS SQL Server database.

In OAC navigate to Create -> Connection:

Enter the on-premises database host you want to connect to, the userID and the database name.This is a big plus for Data Gateway compared to older RDC. With the RDC you had to add a jdbc connection to a config file on the RDC client. With Data Gateway all connections are managed from within your OAC instance.

Eagle-eyed Essbase users will also notice that this is connecting to a SQL version of the Essbase classic ‘The Beverage Company’ (TBC) database :)

Creating an OAC Data Set

You can create an OAC data set graphically by selecting tables/columns manually. This is very simple and any end user (no SQL knowledge required) can define the data they want to analyse. The downside is that you can only select one table per data set if done graphically. You would need to create an individual data set for each table and then join them together by either using an OAC Dataflow or by adding all the data sets to a project and then joining the data sets together in the data diagram.

People that understand SQL statements can by-pass this and create only one data set using an SQL Statement using joins which combines the data from all tables. This will obviously be more efficient but requires more technical knowledge. OAC really does give you the best of both worlds, from basic end user to SQL jockey. I’ll show both ways.

For this example my TBC SQL database consists of a SALESFACT table and dimensional tables MARKET, PRODUCT, SCENARIO, SUPPLIER.

Creating a Data Set Graphically

Okay, this is the easy way to create your data set without writing any SQL code. The downside is that you need to create one data set per table. You can write a SQL statement to join all your tables into one data set which is a lot more efficient but might not be simple for non-technical users. OAC gives us the option to do both. Scroll further down to see the SQL statement example.

You can create a data set by selecting the schema from your MS SQL Connection:

Data Gateway will then connect directly to your on-premises database and prompt you to select which schema you want to connect to, dbo in our case. Notice the 'select columns' and 'Enter SQL' radio buttons. Choose 'select columns' for the graphical mode:

Once you have selected your schema you can select which table and columns you want to add to your data set.

Here I have created a data set called TBC_MARKET (it just says MARKET in the screenshot) consisting of the STATEID column and the STATE column. When you save your data set OAC will populate that data set with the data directly from your on-premises MS SQL source.

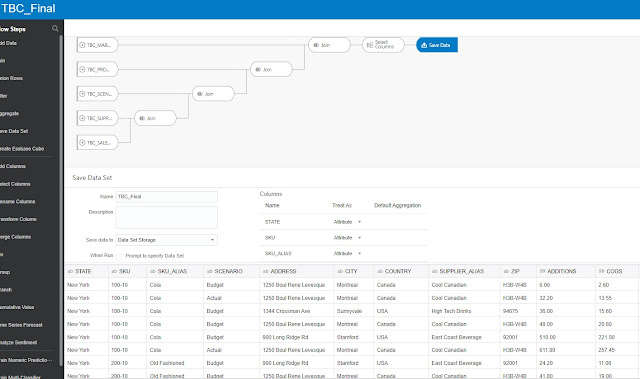

I then go through the same process for all my other tables, one data set per table and end up with 5 data sets:

My TBC_SALESFACT data set looks like this:

My dimension columns consist of foreign keys so any reports will be meaningless. I need to join these keys with the other dimension tables to give me some meaningful results.

I can do this two ways, using a data flow to create a final data set or by adding ALL the data sets to a project and then joining the datas sets together in the data diagram.

Joining the TBC data sets in the data diagram of a OAC Project:

Alternatively I can join the individual TBC_* data sets using a OAC data flow and saving to a new TBC_FINAL data set:

Both result in being able to visualize my data using meaningful member names:

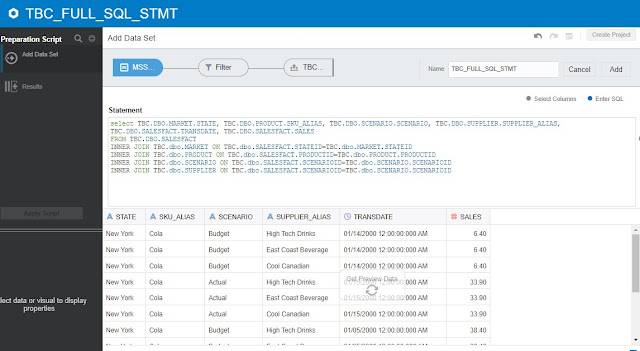

Creating a Data Set using SQL

This is a lot more efficient because we can control the exact SQL we invoke to the on-premises SQL server and it will join all of the tables into one data set instead of having to join several data sets via a data flow or via the data diagram.

This is the SQL statement containing my joins which defines the data I want to bring into OAC:

select TBC.DBO.MARKET.STATE, TBC.DBO.PRODUCT.SKU_ALIAS, TBC.DBO.SCENARIO.SCENARIO, TBC.DBO.SUPPLIER.SUPPLIER_ALIAS, TBC.DBO.SALESFACT.TRANSDATE, TBC.DBO.SALESFACT.SALES

FROM TBC.DBO.SALESFACT

INNER JOIN TBC.dbo.MARKET ON TBC.dbo.SALESFACT.STATEID=TBC.dbo.MARKET.STATEID

INNER JOIN TBC.dbo.PRODUCT ON TBC.dbo.SALESFACT.PRODUCTID=TBC.dbo.PRODUCT.PRODUCTID

INNER JOIN TBC.dbo.SCENARIO ON TBC.dbo.SALESFACT.SCENARIOID=TBC.dbo.SCENARIO.SCENARIOID

INNER JOIN TBC.dbo.SUPPLIER ON TBC.dbo.SALESFACT.SCENARIOID=TBC.dbo.SCENARIO.SCENARIOID

We create a new data set just like we did in the previous section but we select the 'Enter SQL' radio button to enter our SQL:

I can now create a project on that one data set and visualize my data. Nice and easy and FAST!

Cached or Live Data?

There is an option in each direct database connected data set to either cache the data or have a 'live' connection. I tested the Live connection, added a new row to my on-premises database and the new row appeared immediately in my OAC project. It does work! Caveats on caching: there is a limit to the size of data held in the cache and you can't modify that setting yet in the OAC console. Also, I don’t know how often the cache is refreshed.

For large data sets where live data isn’t a requirement I would probably create a data flow and schedule to run overnight to a new target data set. The data wouldn’t be live but it would ensure fast data retrieval times.

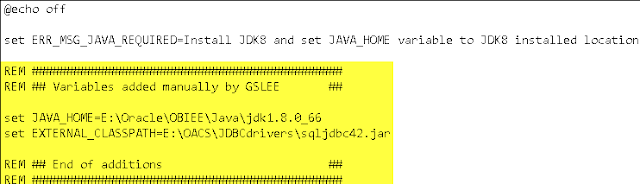

Adding your Data Gateway Connection to the RDP

Edit 29/11/19: Ignore this section, with OAC update 105.4 you can now easily connect to Data Gateway using your RPD! Go here to read all about it.

Ok, I admit defeat here. I don't think there is a way to add the current Windows version of Data Gateway as a java datasource to the RPD. This works in the older Remote Data Connector. The Data Gateway config documentation seems to have been copied from the RDC docs.

In the Admin Client you are meant to go to File -> Load Java Datasources and connect to your Data Gateway. This internally connects to http://hostname:port/obiee/javads . There isn't a 'obiee' webapp present on the Windows version of Data Gateway so this must be a work in progress. Fingers crossed it should be added soon.

If I'm wrong or I find out more I'll update the blog.

Conclusions

Here is a list of positives and negatives of the current release of Data Gateway compared to the older Remote Data Connector, given the pace of new features I'm sure any negatives will soon be fixed:

Positives:

- Very simple to install/configure

- No need to open up any ports in your local firewall

- All database connections are created in OAC, no need to touch the Data Gateway config

Negatives:

- Can't add a connection in the RPD yet You can in 105.4! Look here.

- No Windows service yet

Cheers, happy on-premises visualizing!